
Jianshu Hu

I'm a PhD student at SJTU Global College in Shanghai, working on sample-efficient and generalizable robot learning. I am currently advised by Prof. Yutong Ban and Prof. Paul Weng. Before my PhD, I completed my master's degree at the GRASP Lab, University of Pennsylvania (UPenn), where I had the honor of being advised by Prof. Michael Posa.


Research

I'm interested in robot learning and robot manipulation. My current research focuses on improving the sample efficiency and generalization ability of robot learning algorithms by exploiting data augmentation, leveraging pre-trained models, and learning dynamics models (world models). Some papers are highlighted.


Generalizable Coarse-to-fine Robot Manipulation via Language-aligned 3D Keypoints

Jianshu Hu, Lidi Wang, Shujia Li, Yunpeng Jiang, Xiao Li, Paul Weng†, Yutong Ban†
Under Review
arXiv, website
We introduce a novel coarse-to-fine 3D manipulation policy that outperforms the state-of-the-art method, achieving a 12% higher average success rate with only 1/5 of the training trajectories. In real-world experiments, our method demonstrates strong generalization to novel tasks and object variations with only 10 demonstrations per task.


Time Reversal Symmetry for Efficient Robotic Manipulations in Deep Reinforcement Learning

Yunpeng Jiang, Jianshu Hu, Paul Weng†, Yutong Ban†
NeurIPS 2025
arXiv, website
We propose Time Reversal symmetry enhanced Deep Reinforcement Learning (TR-DRL), a framework that combines trajectory reversal augmentation and time-reversal-guided reward shaping to efficiently solve temporally symmetric tasks.


Diffusion Stabilizer Policy for Automated Surgical Robot Manipulations

Chonlam Ho*, Jianshu Hu*, Hesheng Wang, Qi Dou, Yutong Ban
Under Review
arXiv
To extend the success of learned manipulation policies to surgical robotics, we propose a diffusion-based policy learning framework, called Diffusion Stabilizer Policy, which enables training with imperfect or even failed trajectories.


State-Novelty Guided Action Persistence in Deep Reinforcement Learning

Jianshu Hu, Paul Weng†, Yutong Ban†
Machine Learning Journal
arXiv
In this paper, we propose a novel method that dynamically adjusts action persistence based on the current exploration status of the state space.


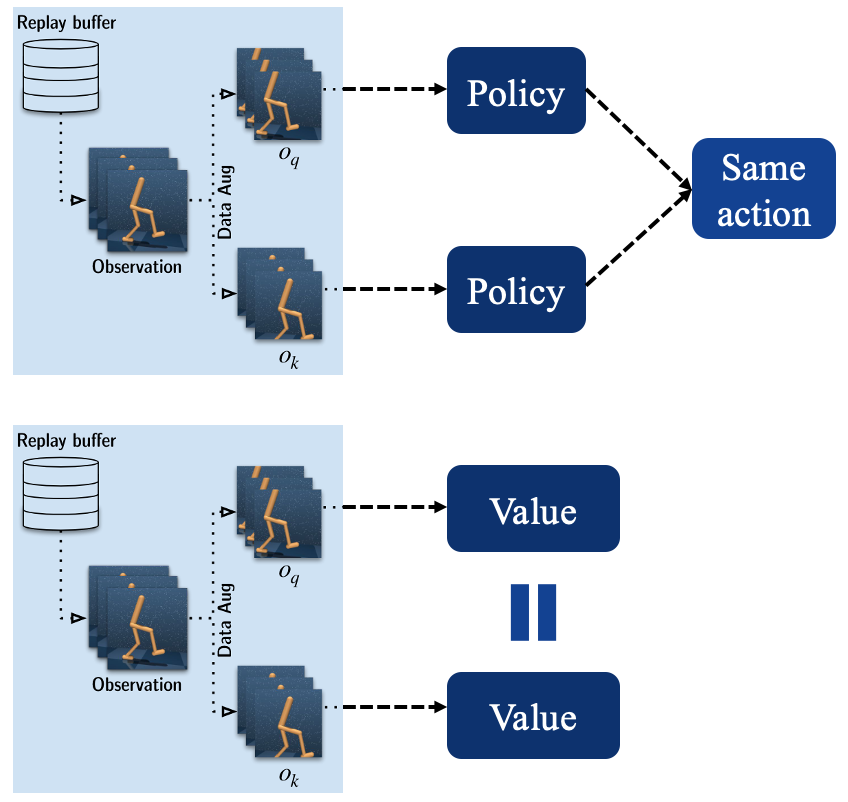

Revisiting Data Augmentation in Deep Reinforcement Learning

Jianshu Hu, Yunpeng Jiang, Paul Weng
ICLR 2024
arXiv
We make recommendations on how to exploit data augmentation in image-based DRL in a more principled way. We also introduce a regularization term based on tangent prop into RL training.


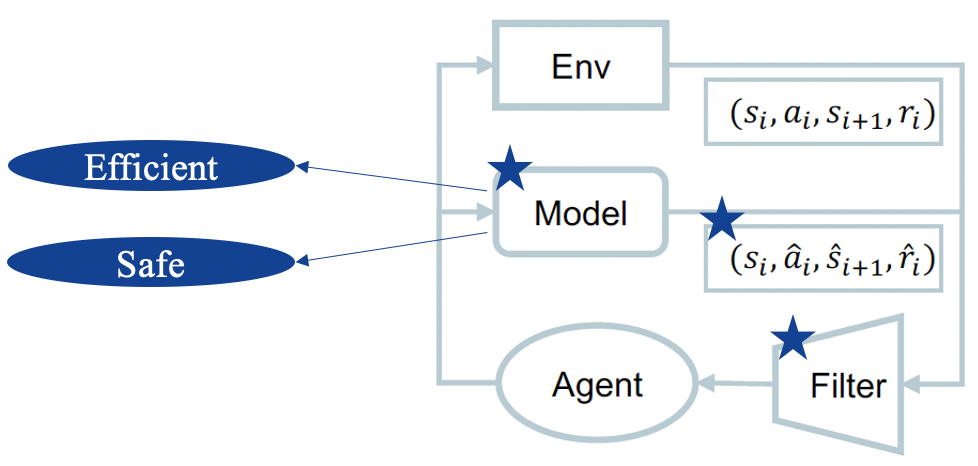

Solving Complex Manipulation Tasks with Model-Assisted Model-Free Reinforcement Learning

Jianshu Hu, Paul Weng
CoRL 2022
paper link
A novel deep reinforcement learning approach that improves the sample efficiency of a model-free actor-critic method by using a learned model to encourage exploration.


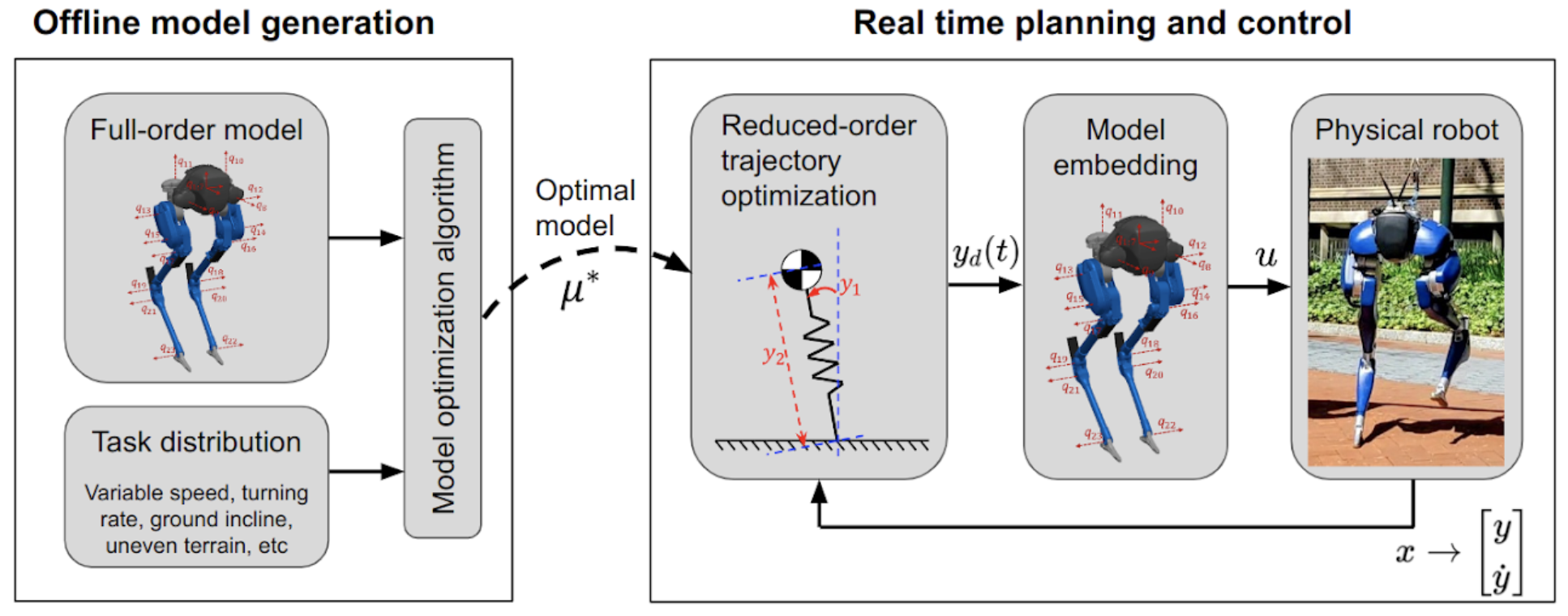

Beyond Inverted Pendulums: Task-Optimal Simple Models of Legged Locomotion

Yu-Ming Chen, Jianshu Hu, Michael Posa
T-RO
arXiv
We propose a model optimization algorithm that automatically synthesizes reduced-order models.

Miscellanea


Conference Reviewer: ICLR, MICCAI

Journal Reviewer: TNNLS, RA-L


This website is built on this source code. Thanks to Jon Barron.